FRAD first phase development

FRAD

BeED FRAD Core (Face Recognition Artificial Detection) is a face emotion detection project written in Python.

Introduction

This project aims to classify the emotion shown on a person's face into one of seven categories using deep convolutional neural networks. The model is trained on the FER-2013 dataset, which was presented at the International Conference on Machine Learning (ICML). The dataset consists of 35,887 grayscale face images, each 48x48 pixels, labeled with seven emotions: angry, disgusted, fearful, happy, neutral, sad, and surprised.

Dataset

FER-2013 is distributed as a CSV file in which each image is stored as a string of pixel values. It contains 35,887 samples across seven emotion categories: angry, disgusted, fearful, happy, neutral, sad, and surprised.
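As a rough illustration of the CSV layout, the sketch below parses FER-2013 rows into 48x48 grids using only the standard library. It assumes the column names `emotion`, `pixels`, and `Usage` and the label order of the original FER-2013 release (0 = angry, 1 = disgusted, 2 = fearful, 3 = happy, 4 = sad, 5 = surprised, 6 = neutral). `parse_pixels` and `load_fer2013` are hypothetical helper names, not part of FRAD.

```python
import csv
import io

# Assumed label order of the integer "emotion" column in the FER-2013 CSV.
EMOTIONS = ["angry", "disgusted", "fearful", "happy", "sad", "surprised", "neutral"]
IMAGE_SIZE = 48


def parse_pixels(pixel_string):
    """Turn a space-separated string of 48*48 grayscale values (0-255) into a 48x48 grid."""
    values = [int(v) for v in pixel_string.split()]
    if len(values) != IMAGE_SIZE * IMAGE_SIZE:
        raise ValueError(f"expected {IMAGE_SIZE * IMAGE_SIZE} pixels, got {len(values)}")
    return [values[r * IMAGE_SIZE:(r + 1) * IMAGE_SIZE] for r in range(IMAGE_SIZE)]


def load_fer2013(file_obj):
    """Yield (image, emotion_name, usage) for every row of a FER-2013 style CSV."""
    for row in csv.DictReader(file_obj):
        yield parse_pixels(row["pixels"]), EMOTIONS[int(row["emotion"])], row.get("Usage")


# Tiny in-memory example: one "happy" face where every pixel is 128.
sample_csv = "emotion,pixels,Usage\n3," + " ".join(["128"] * 2304) + ",Training\n"
rows = list(load_fer2013(io.StringIO(sample_csv)))
```

For a real file, the same generator can be fed an open handle, e.g. `open("fer2013.csv", newline="")` (the file name here is only an example).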

Roughly half of the samples are used for training, and the other half are used for validation.

The training samples are used to learn the model weights. The validation samples are held out from the weight updates and are used to evaluate the model while training is in progress, checking how well it performs on data it has not been trained on.
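A minimal sketch of a roughly 50/50 split, assuming a simple seeded shuffle over the sample indices. The function name `split_samples` and the seed value are illustrative, and the split actually used by FRAD may differ.

```python
import random


def split_samples(samples, train_fraction=0.5, seed=42):
    """Shuffle a copy of the samples with a fixed seed and split into (train, validation)."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Split the indices of all 35,887 FER-2013 samples.
train_idx, val_idx = split_samples(range(35887))
print(len(train_idx), len(val_idx))  # 17943 17944
```

Only the `train_idx` samples update the weights; the `val_idx` samples are evaluated after each epoch to monitor generalization. In Keras, held-out data of this kind is usually passed through the `validation_data` argument of `model.fit`.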

Training

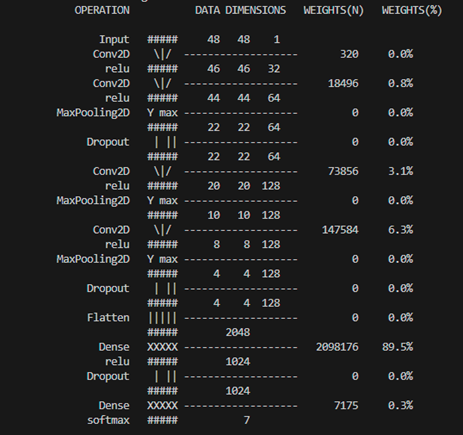

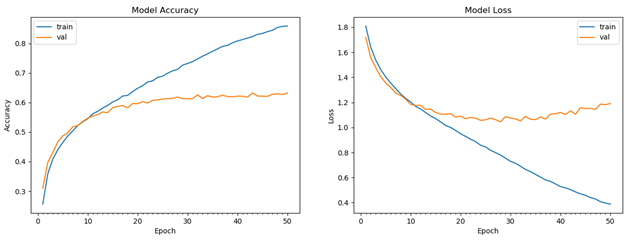

A simple four-layer CNN, built from Conv2D layers with ReLU activations, dropout, and a softmax output layer, reaches 63.2% test accuracy after 50 epochs of training.
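To make the named building blocks concrete, the sketch below implements ReLU, softmax, and inverted dropout in plain Python on single vectors. It illustrates the techniques only; it is not the FRAD layer stack, whose exact layer sizes are not given here, and the score values are made up.

```python
import math
import random


def relu(xs):
    """ReLU: keep positive activations, replace negatives with 0."""
    return [max(0.0, x) for x in xs]


def softmax(logits):
    """Convert raw class scores to probabilities that sum to 1.
    Subtracting the max first avoids overflow in math.exp."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dropout(xs, rate, training, rng):
    """Inverted dropout: while training, zero each value with probability `rate`
    and scale the survivors by 1 / (1 - rate); at inference, return values unchanged."""
    if not training or rate == 0.0:
        return list(xs)
    keep = 1.0 - rate
    return [x / keep if rng.random() < keep else 0.0 for x in xs]


activations = relu([-1.5, 0.0, 2.0, 3.5])  # [0.0, 0.0, 2.0, 3.5]
dropped = dropout(activations, rate=0.5, training=True, rng=random.Random(0))

# Hypothetical output scores, one per emotion class (7 classes).
scores = [2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]
probs = softmax(scores)  # highest probability at index 3
```

Dropout only acts during training, which is why the scaling by 1 / (1 - rate) is applied there: it keeps the expected activation the same at training and inference time.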

CNN (convolutional neural network) diagram

Model (model.h5)

When training completes, the model is saved as an HDF5 file with the .h5 extension (model.h5) that holds the trained weights. The file is ready for production use. At only about 9 MB it is lightweight, which helps keep loading and inference fast during real-time face detection.
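Once `model.h5` is loaded (with Keras this is typically done with `tf.keras.models.load_model("model.h5")`), each detected face still has to be prepared for the 48x48 input, and the seven softmax outputs have to be mapped back to an emotion name. The sketch below shows only that pre- and post-processing in plain Python. The [0, 1] pixel scaling and the label order are assumptions that must match how the model was actually trained, and `classify_face` with its `predict` callback is a hypothetical interface, not FRAD's API.

```python
# Assumed label order; it must match the label encoding used during training.
EMOTIONS = ["angry", "disgusted", "fearful", "happy", "sad", "surprised", "neutral"]


def preprocess(face):
    """Scale a 48x48 grid of 0-255 grayscale pixels to floats in [0, 1] (assumed normalization)."""
    if len(face) != 48 or any(len(row) != 48 for row in face):
        raise ValueError("expected a 48x48 grayscale face crop")
    return [[p / 255.0 for p in row] for row in face]


def classify_face(face, predict):
    """Run `predict` (e.g. a wrapper around the loaded model) and return (emotion, confidence)."""
    probs = predict(preprocess(face))
    best = max(range(len(probs)), key=lambda i: probs[i])
    return EMOTIONS[best], probs[best]


# Stand-in for the real model: always most confident in "surprised".
def fake_predict(pixels):
    return [0.05, 0.01, 0.04, 0.10, 0.05, 0.70, 0.05]


label, confidence = classify_face([[200] * 48 for _ in range(48)], fake_predict)
print(label, confidence)  # surprised 0.7
```

With the real model, and assuming it expects a single-channel 48x48 input, `predict` would reshape the grid into a batch of shape (1, 48, 48, 1) and call `model.predict` on it.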

Testing

Sample Usage

1. A Flask API that batch-processes uploaded images and saves the results to a database.
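A minimal sketch of the batch-and-store step behind such an API, using the standard-library `sqlite3` module as a stand-in for whatever database FRAD actually uses. The table layout, `process_batch`, and the `classify` callback are hypothetical. In a Flask route, the uploaded files could be collected with `request.files.getlist(...)` and passed in as `(filename, data)` pairs.

```python
import sqlite3


def init_db(conn):
    """Create a hypothetical table for per-image predictions."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS predictions ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "filename TEXT NOT NULL, "
        "emotion TEXT NOT NULL, "
        "confidence REAL NOT NULL)"
    )


def process_batch(conn, uploads, classify):
    """Classify each (filename, image) pair and store all results in one transaction."""
    results = [(name, *classify(image)) for name, image in uploads]
    with conn:  # commits on success, rolls back if an insert fails
        conn.executemany(
            "INSERT INTO predictions (filename, emotion, confidence) VALUES (?, ?, ?)",
            results,
        )
    return results


conn = sqlite3.connect(":memory:")
init_db(conn)


# Stand-in classifier; a real one would decode the image bytes and run the model.
def fake_classify(image):
    return ("happy", 0.9)


saved = process_batch(conn, [("a.png", b"..."), ("b.png", b"...")], fake_classify)
```

Writing the whole batch in one transaction means a failure partway through leaves no half-saved batch in the database.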
